Master prompt crafting strategies such as refining prompts through iteration for better interactions. Discover practical tips and techniques to enhance your communication skills.

January 16, 2025 – Reading Time: 15 minutes

If you missed the earlier lessons of this course, you can find them, along with every lesson in this series, on the main Learn Prompt Engineering Basics page.

Day 4—Learn to Prompt: Refining Prompts Through Iteration

Problem or Pain Point

If you’ve been following my series on prompt engineering, you already know how crucial it is to craft effective prompts that guide AI to give us the best possible replies. But here’s the thing: many people stop at the first attempt. They type in a question or statement, take whatever they get back, and move on.

That’s a huge missed opportunity.

In reality, writing prompts for AI is an iterative process: you improve the prompt by studying each response it produces. It’s akin to going to a tailor to get a suit or a dress made, where you expect several rounds of measurement and adjustment to reach a perfect fit. The same holds for prompt engineering. Whether you’re using AI for small business solutions or delving into AI automation to speed up your workflows, the real problem isn’t just poorly written prompts. It’s the reluctance to iterate and enhance prompts until the output is exactly what you need.

Every day, I talk to small business owners who are new to AI. They’re often frustrated by vague or irrelevant answers, which is especially costly for entrepreneurs who are juggling a million tasks. That’s why refining your prompts step by step is essential.

Opportunity or Outcome

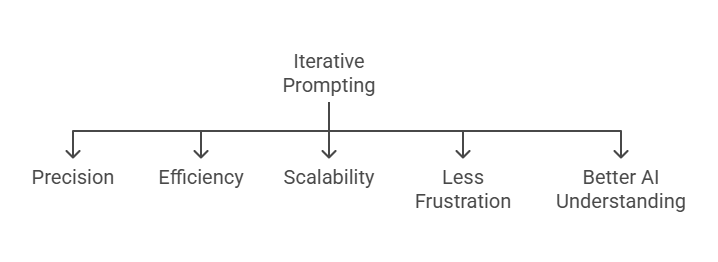

Before diving into the benefits of iteration, it’s essential to recognize how often a single adjustment can dramatically improve results. When you do take the extra time to iterate, you’ll notice a few significant changes in your work:

- Precision in Responses – The more you refine your initial prompts, the more precise and targeted the output will become.

- Better Understanding of AI – Iteration helps you see how AI replies to different phrases, constraints, and clarifications. Over time, you’ll develop an intuitive sense of how to steer AI toward helpful or creative answers.

- Improved Efficiency – Fewer wasted hours sifting through irrelevant answers means you can dedicate more time to what matters in your business.

- Scalability for AI Automation – If you plan to use AI for small business needs, especially repetitive tasks, honing your prompts upfront can pave the way for smoother AI automation. A well-structured prompt can often become the template for a recurring process, saving you even more time and effort.

- Reduced Frustration – When you iterate, you’ll discover new ways to get better results, lessening frustration and boosting your confidence in the technology.

These outcomes point to a single conclusion: iterative prompt refinement isn’t a nicety; it’s a necessity. If you’re serious about leveraging AI effectively, especially if you’re relying on AI for small business solutions, you have to adopt an iterative mindset. Let’s explore how you can make that happen.

The Step-by-Step Guide to Iterative Prompt Engineering

1. Start Simple

When you first begin, try drafting a simple, broad prompt. For example, you might start with: “Describe the concept of machine learning.” This is a straightforward request and will likely yield a reasonably generic reply. That’s okay—because this isn’t where your prompt engineering journey ends. This broad question serves as a baseline. By reading through the replies you get, you’ll identify what’s missing, what’s off-target, or what could be improved.

As we discussed in lesson 2, few-shot prompting is a technique in which you give the AI model a small number of worked examples, often just a handful, to help it adapt to a new task or genre. The examples help the model infer the pattern you want and deliver high-quality output from minimal input. Before reaching for examples, though, review the baseline reply:

- Identify Gaps: Is the answer too detailed or too broad? Does it use too much jargon? Are there specific points it misses entirely?

- Check for Relevancy: Does it address the topic from the angle you find most valuable (e.g., are you focusing on small businesses, advanced developers, or a general audience)?

This is your starting point. Don’t expect it to be perfect.
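Once the baseline is in hand, the few-shot technique mentioned above is one way to steer the next attempt. Here is a minimal Python sketch of how such a prompt might be assembled. The function name, the "Input:/Output:" layout, and the bakery taglines are all my own illustrative assumptions, not a required format.

```python
def build_few_shot_prompt(task, examples, new_input):
    """Assemble a few-shot prompt: instruction, worked examples, then the new case."""
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # End with the new input and an open "Output:" for the model to complete.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical examples, invented purely for illustration.
examples = [
    ("chocolate cupcake", "Rich, fudgy, and gone by noon."),
    ("lemon tart", "Bright, zesty, and just sweet enough."),
]

prompt = build_few_shot_prompt(
    "Write a one-line product tagline in the same style as the examples.",
    examples,
    "blueberry muffin",
)
print(prompt)
```

The printed text is what you would paste into the chat window. Swapping the examples changes the style the model is likely to imitate.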

2. Refine the Prompt

With the initial response in hand, adjust your prompt to fill the gaps or correct the issues you noticed. Let’s say the first machine learning reply was too technical, and you want it to be understandable by a 10-year-old. You might revise your prompt to: “Explain the concept of machine learning to a 10-year-old using a real-world example.” Notice what changed:

- Clarity in Audience: You specify that the explanation should be tailored to a 10-year-old.

- Context or Format: You’re asking for a real-world example, guiding the AI to provide a tangible illustration.

In-context learning is an emergent ability of large language models that allows them to learn from a prompt temporarily. The ability tends to strengthen as models scale up, and nothing learned this way carries over from one conversation to the next, which makes it distinct from lasting forms of training like fine-tuning. It is particularly relevant when refining prompts, because each revised prompt gives the model new context to adapt to.

By doing this, you’re telling the AI: “Simplify it, and give me something relatable.” That’s the crux of iterative prompting—taking an initial reply and evolving it toward a more pinpointed goal.

3. Adjust Constraints and Instructions

Sometimes, you need more than just a simpler explanation. Maybe you’d like the reply to be shorter or to include bullet points. You can add these constraints directly into your prompt. For instance: “Explain the concept of machine learning to a 10-year-old, using a real-world example, in 150 words or less, and use bullet points where possible.”

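Understanding context windows is crucial for maintaining continuity in AI responses. The context window is the amount of text a model can take into account at once, including earlier turns of the conversation. As context windows grow, models can keep more of the previous exchange in view, which makes it easier to generate output that stays aligned with an ongoing discussion.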

Imagine you need AI to draft a quick social media post for a bakery. Instead of a general prompt like “Write a post about cupcakes,” you could refine it to: “Write a 150-word promotional post for a bakery featuring cupcakes, including a call-to-action and using bullet points for key highlights.” You can shape the answer further by explicitly telling the AI the length limit and the structure you want (bullet points). This approach is extremely valuable in AI automation, where a standardized format might be required for multiple tasks or applications (like automatically generating blog outlines, social media posts, or product descriptions).
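To show how a refined prompt can become a reusable template for automation, here is a small Python sketch built on the standard library’s `string.Template`. The template wording comes from the bakery prompt above; the function name `make_post_prompt` and its parameters are assumptions of mine for illustration.

```python
from string import Template

# The refined bakery prompt, with the parts that vary turned into placeholders.
POST_TEMPLATE = Template(
    "Write a ${word_limit}-word promotional post for a ${business} "
    "featuring ${product}, including a call-to-action and using "
    "bullet points for key highlights."
)


def make_post_prompt(business, product, word_limit=150):
    """Fill the template so every generated prompt shares one structure."""
    return POST_TEMPLATE.substitute(
        business=business, product=product, word_limit=word_limit
    )


print(make_post_prompt("bakery", "cupcakes"))
print(make_post_prompt("florist", "spring bouquets", word_limit=100))
```

Because the structure is fixed, every post request carries the same length limit and format instructions, and only the business details change.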

4. Test and Tweak Repeatedly

You’ll rarely achieve a perfectly refined prompt on the second try. It can take several iterations, each building on the last. Suppose, for example, that your goal is to demonstrate expertise in AI for small business applications. You might keep layering in instructions: “Explain the concept of machine learning to a 10-year-old, using a real-world case related to how a small bakery might predict the demand for cupcakes each day, in 150 words or less, using bullet points.” Now you’ve added another layer: you want a small business illustration, specifically a bakery. With each iteration, you’re getting closer to your desired outcome. In real-world applications, especially for entrepreneurs, the better your prompt, the faster you reach an output you can use.
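The layering described above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the function `layered_prompt` and its parameter names are hypothetical, and the wording simply mirrors the machine learning prompts used in this lesson.

```python
def layered_prompt(topic, audience=None, example=None, max_words=None, layout=None):
    """Build a prompt from optional layers; omitted layers are simply left out."""
    prompt = f"Explain the concept of {topic}"
    if audience:
        prompt += f" to {audience}"
    extras = []
    if example:
        extras.append(f"using {example}")
    if max_words:
        extras.append(f"in {max_words} words or less")
    if layout:
        extras.append(f"using {layout}")
    if extras:
        prompt += ", " + ", ".join(extras)
    return prompt + "."


# Each iteration keeps the earlier layers and adds more.
iterations = [
    {},
    {"audience": "a 10-year-old", "example": "a real-world example"},
    {"audience": "a 10-year-old", "example": "a real-world example",
     "max_words": 150, "layout": "bullet points"},
]

prompts = [layered_prompt("machine learning", **layers) for layers in iterations]
for number, prompt in enumerate(prompts, start=1):
    print(f"Iteration {number}: {prompt}")
```

Keeping each version side by side like this makes it easy to see which added layer produced which change in the reply.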

Understanding Prompt Iteration in Natural Language Processing

Prompt iteration is a cornerstone of natural language processing (NLP), a subfield of artificial intelligence that focuses on the interaction between computers and humans using natural language. This iterative process involves creating initial prompts, evaluating their effectiveness, and making incremental changes to enhance the quality and relevance of AI-generated responses.

In NLP, prompt iteration is essential for optimizing communication between users and AI models, especially when tackling complex tasks that require nuanced and context-dependent responses. For instance, if you’re developing prompts for a customer service chatbot, the initial prompt might be too vague, leading to generic output. By iterating on the prompt, you can home in on the specifics so the AI provides more accurate and helpful answers.

Overcoming Challenges in Prompt Iteration

One of the primary challenges in prompt iteration is what is sometimes called prompt fatigue: repeatedly submitting near-identical prompts tends to produce near-identical output, so each subsequent iteration yields less improvement. The model itself does not tire; the returns diminish because the prompt is not changing in a meaningful way.

To overcome prompt fatigue, developers can use techniques such as prompt augmentation. This involves modifying the prompt to generate variations, thereby increasing the diversity of the output. For example, instead of repeatedly asking the AI to “describe machine learning,” you might vary the prompt to “explain how machine learning is used in healthcare” or “discuss the benefits of machine learning in finance.” These variations push the model toward different material and give you fresh output to compare.
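Generating variations like these by hand gets tedious, so here is a rough Python sketch of one way to do it. The two phrasings come from the examples above; the constant names, the domain list, and the function `prompt_variations` are my own assumptions for illustration.

```python
from itertools import product

# Phrasings taken from the examples above, with placeholders for the parts that vary.
ANGLES = [
    "Explain how {topic} is used in {domain}.",
    "Discuss the benefits of {topic} in {domain}.",
]
DOMAINS = ["healthcare", "finance"]


def prompt_variations(topic, angles=ANGLES, domains=DOMAINS):
    """Return one prompt for every combination of angle and domain."""
    return [
        angle.format(topic=topic, domain=domain)
        for angle, domain in product(angles, domains)
    ]


variations = prompt_variations("machine learning")
for variation in variations:
    print(variation)
```

Two angles and two domains give four distinct prompts. Adding your own industry to the domain list is an easy way to tailor the set.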

Another challenge is the risk of overprompting, where the prompt is packed with so much detailed instruction that the model has little room to produce anything creative or unexpected. To mitigate this risk, developers can take a lighter-touch approach, referred to here as soft prompting, which relies on more subtle cues and implicit guidance to shape the desired replies. (In machine learning research, the same term usually refers to learned prompt embeddings, which is a different technique.) For instance, instead of explicitly stating every detail, you might provide a general direction and allow the AI to fill in the gaps. This approach can help maintain the AI’s creativity while guiding it towards the desired outcome.

By being aware of these challenges and using strategies to overcome them, developers can refine their prompts more effectively and improve the performance of their AI models.

The Role of Feedback in Prompt Iteration

Feedback is a critical component of prompt iteration, as it enables developers to evaluate the effectiveness of their prompts and make data-driven decisions to improve them. Feedback can take many forms, including user feedback, automated evaluation metrics, and analysis of AI-generated responses.

User feedback is invaluable, as it provides direct insights into how well the AI’s responses meet the needs of the end-users. For instance, if users consistently find the AI’s answers too technical, this feedback can guide developers to simplify their prompts.

By incorporating feedback into the prompt iteration process, developers can make targeted improvements to their prompts. This enhances the accuracy and relevance of AI-generated responses and helps identify and address biases and errors. Ultimately, leveraging feedback allows developers to create more effective prompts and improve the overall performance of their AI models.
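As a simple illustration of data-driven iteration, the Python sketch below averages user ratings for each prompt version and picks the best one. The rating scale of 1 to 5, the version labels, and the sample data are all hypothetical, invented only to show the idea.

```python
from collections import defaultdict
from statistics import mean


def average_ratings(feedback):
    """Group (version, rating) pairs by version and average each group."""
    by_version = defaultdict(list)
    for version, rating in feedback:
        by_version[version].append(rating)
    return {version: mean(ratings) for version, ratings in by_version.items()}


# Hypothetical feedback: (prompt version, user rating from 1 to 5).
feedback = [("v1", 2), ("v1", 3), ("v2", 4), ("v2", 5), ("v2", 4)]

averages = average_ratings(feedback)
best = max(averages, key=averages.get)

for version, score in averages.items():
    print(f"{version}: {score:.2f}")
print(f"Best-rated prompt version: {best}")
```

Even a small tally like this turns “the new prompt feels better” into something you can compare across iterations.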

Debugging Your Prompt for Large Language Models

You may find the reply is still off, even with multiple iterations. For instance, a vague prompt like “Tell me about marketing” might yield a broad and unhelpful response, such as a list of random marketing strategies without context. Often, this is a sign that your prompt might be inadvertently steering the AI in the wrong direction or that it’s too vague.

Large language models (LLMs) can also help you debug. Paste in the prompt and the disappointing reply, then ask the model what was ambiguous or missing. Its answer often points to the next revision.

1. Common Pitfalls

- Overly Broad Language: Terms like “explain,” “discuss,” or “describe” can produce long, winding responses if not paired with additional constraints. Experimenting with different prompt styles, such as questions or scenarios, can help you communicate more effectively with AI and get responses tailored to your specific needs.

- Lack of Context: Telling the AI what you want is good, but telling it why or how you intend to use it can be a game-changer. Include context, target audience, and end goal.

- Contradictory Instructions: If you ask for “a deep dive” while also saying “in 50 words or less,” you’ll probably get inconsistent results. Clashing instructions make the AI unsure of which direction to prioritize.

- Unclear Audience: Failing to specify who the content is for—children, novices, industry professionals, or small business owners—leaves the AI guessing the level of detail and tone it should use.

2. Strategies for Adjusting Length, Detail, or Structure

- Be Specific About Word Count: A direct word limit can help shape the length.

- Indicate Formatting Preferences: Want bullet points? Numbered lists? Summaries? Just ask for them.

- Set the Tone: Indicate if you’d like a friendly, professional, or casual style.

- Offer Examples: You might include an example of the style or format you want. For instance, “Write it in the style of a newspaper article” or “Write it like a personal anecdote.”
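Once you have asked for a length or a format, it helps to confirm the reply actually followed it. The Python sketch below is one rough way to do that. The function name `check_reply` and the simple word-splitting and bullet-detection rules are my own assumptions, and real replies may need looser checks.

```python
def check_reply(reply, max_words=None, require_bullets=False):
    """Return a list of ways the reply misses the requested constraints."""
    problems = []
    word_count = len(reply.split())
    if max_words is not None and word_count > max_words:
        problems.append(f"too long: {word_count} words (limit {max_words})")
    if require_bullets:
        # Treat any line starting with -, *, or a bullet character as a bullet point.
        has_bullets = any(
            line.lstrip().startswith(("-", "*", "\u2022"))
            for line in reply.splitlines()
        )
        if not has_bullets:
            problems.append("no bullet points found")
    return problems


good_reply = "- Fresh cupcakes daily\n- Order online today"
bad_reply = "Our cupcakes are baked fresh every single morning."

print(check_reply(good_reply, max_words=10, require_bullets=True))
print(check_reply(bad_reply, max_words=5, require_bullets=True))
```

An empty list means the reply met every constraint. Anything else tells you what to tighten in the next version of the prompt.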

3. The Mental Checklist for Debugging Prompt Effectiveness

- “Did I give enough context?”

- “Is my main request overshadowed by additional constraints?”

- “Have I contradicted myself anywhere in the prompt?”

- “Am I sure about the target audience and purpose of the content?”

Answering these questions honestly will highlight areas you can improve on. Each pass makes your prompt better and more reliable.
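Part of this checklist can be roughed out as an automated pre-check. The Python sketch below is a crude heuristic, not a real prompt analyzer: the cue phrases, the thresholds, and the function name `lint_prompt` are assumptions I made up for illustration, and simple substring matching will miss or misjudge plenty of prompts.

```python
import re

# Hypothetical cue phrases; extend these lists to suit your own prompts.
AUDIENCE_CUES = ("for a", "for an", "to a", "to an", "audience", "beginner", "expert")
DEPTH_CUES = ("deep dive", "in depth", "in-depth", "comprehensive")


def lint_prompt(prompt, short_limit=100, min_words=8):
    """Return warnings for common pitfalls: thin context, no audience, clashing asks."""
    text = prompt.lower()
    warnings = []
    if len(text.split()) < min_words:
        warnings.append("very short prompt: consider adding context")
    if not any(cue in text for cue in AUDIENCE_CUES):
        warnings.append("no audience specified")
    limit = re.search(r"(\d+)\s+words", text)
    wants_depth = any(cue in text for cue in DEPTH_CUES)
    if limit and wants_depth and int(limit.group(1)) <= short_limit:
        warnings.append("possible contradiction: asks for depth and a tight word limit")
    return warnings


print(lint_prompt("Tell me about marketing"))
print(lint_prompt(
    "Give me a deep dive on email marketing for a small business owner "
    "in 50 words or less"
))
```

The first prompt trips the context and audience checks; the second passes those but is flagged for asking for a deep dive in 50 words.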

Measuring Prompt Effectiveness

Measuring prompt effectiveness is crucial for evaluating the success of prompt iterations and identifying improvement areas. Several metrics, including accuracy, relevance, fluency, and coherence, can be used to assess prompt effectiveness.

Accuracy measures how well the AI’s reply matches the intended output. Relevance assesses whether the response is pertinent to the prompt. Fluency evaluates the readability and grammatical correctness of the response, while coherence examines the logical flow and consistency of the content.

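Developers can also use automated evaluation metrics, such as BLEU and ROUGE scores, to quantitatively assess the quality of AI-generated responses. Both work by measuring word overlap between the AI’s output and one or more reference texts, so they are most useful when you already have an example of a good answer. These metrics provide a standardized way to compare different iterations and identify which prompts yield the best results.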

In addition to quantitative metrics, qualitative methods such as user feedback and expert evaluation can provide deeper insights into how effective your prompt is. User feedback offers real-world perspectives on how well the AI’s replies meet user needs, while expert evaluation can provide a more nuanced assessment of the content’s quality.

Using a combination of metrics and evaluation methods, developers can comprehensively understand prompt effectiveness. This holistic approach enables them to refine their prompts more effectively, ultimately achieving better results and enhancing the performance of their AI models.
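To give a feel for how overlap-based metrics work, here is a deliberately simplified Python sketch in the spirit of ROUGE-1, which compares single words between a reply and a reference. It is not the official ROUGE or BLEU calculation; those involve more steps, and the function name and sample sentences are my own.

```python
from collections import Counter


def unigram_overlap(candidate, reference):
    """Return (precision, recall, f1) based on shared single words."""
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Counter intersection keeps the smaller count of each shared word.
    overlap = sum((cand_counts & ref_counts).values())
    precision = overlap / max(sum(cand_counts.values()), 1)
    recall = overlap / max(sum(ref_counts.values()), 1)
    if overlap == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


reference = "the baker sells fewer cupcakes on rainy days"
candidate = "the baker sells more cupcakes on sunny days"

precision, recall, f1 = unigram_overlap(candidate, reference)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Notice that the two sentences share six of eight words yet say opposite things about sales, which is a good reminder of why word-overlap scores should be paired with the qualitative checks described above.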

Hands-On Exercise

It’s time to apply these ideas. Let’s illustrate how you iterate on a prompt in real time. We’ll use the case we discussed: “Describe the concept of machine learning.”

Step 1: The Initial Prompt

Prompt:

“Describe the concept of machine learning.”

Likely Response:

You’d receive a general definition of machine learning involving references to algorithms, data, and predictions. It might look something like this:

Machine learning is a branch of AI that teaches computers to learn from data and make decisions or predictions without being explicitly programmed…

You know the drill. But suppose you realize it’s pretty technical and not entirely accessible if you’re talking to a younger audience.

Step 2: Refine for a Younger Audience

Refined Prompt:

“Explain the concept of machine learning to a 10-year-old using a fun, real-world example.”

Likely Refined Response:

Now, the AI might say something along the lines of:

Machine learning is like teaching a computer how to recognize patterns, just like you might learn which snacks you like best after trying them a few times.

The tone becomes more straightforward, and we might see a real-life case about deciding which snacks are popular in a class. It’s better, but we may need more structure.

Step 3: Add Structure and Context

Further Refined Prompt:

“Explain the concept of machine learning to a 10-year-old using a fun, real-world example, in 100 words or less, and present it as a short story.”

Likely Response:

You might get something that reads like a brief story about a child who sells lemonade and learns when to make extra based on the weather, turning it into a machine-learning analogy. Now, you have a neat, structured piece that’s also fun to read.

Step 4: Incorporate a Small Business Angle

Generative AI is well suited to small business applications because iterative prompting lets you steadily improve the relevance and clarity of the results. Say you’re a bakery owner who wants to apply AI automation to predict cupcake sales. Let’s improve the prompt further:

Refined Prompt for Small Business

“Explain the concept of machine learning to a 10-year-old using a real-world illustration of a bakery owner trying to figure out how many cupcakes to bake daily. Use around 100 words in a friendly, story-like style.”

Likely Response:

The AI might respond with a short tale about a baker who notices that on sunny days, more cupcakes sell, and on rainy days, fewer are sold, mirroring how machine learning picks up on patterns in data. This honed prompt merges a simplified explanation with a direct small business scenario.

And there you have it: a straightforward idea iterated multiple times to get drastically different outputs.

Key Takeaways from Day 4

- Iteration is Everything: Don’t settle for the first response. Perfecting your prompt is how you guide the AI toward the best outcome.

- Debug Your Prompt: If you’re not getting the desired results, your prompt probably needs more specifics, clarity, or constraints.

- Use Constraints Strategically: Word limits, tone specifications, and audience context can make or break your final AI output.

- Stay Goal-Oriented: Always remember why you’re generating content in the first place. If your primary focus is AI for small businesses, angle your prompts accordingly.

Why This Matters for You

I’ve spent nearly a solid year learning about AI and what it can do, and I have 30+ years in the business world, seeing how shifts in technology either make or break companies. If you’re a small business owner (like many of my clients and readers), you don’t have time to wade through half-baked responses. You need an efficient, reliable process to leverage AI automation for tasks like content generation, customer service, or market analysis. Iterative prompting is the secret sauce that ensures you consistently get high-quality answers.

Take Action

Exercise: Write a prompt on a topic you need help with—maybe drafting a new product description or generating a social media post. Use the iterative process:

- Start broad.

- Refine for length, style, and audience.

- Debug if something’s off.

- Keep enhancing until you love the output.

Share Your Results: Share the before-and-after versions of your prompts and the AI’s responses once iterated. It’s a great way to visualize how impactful a few minor tweaks can be.

Tomorrow, we’ll explore leveraging system & role instructions so you can continuously improve the quality of ChatGPT’s responses.

Stay curious and keep experimenting—better prompts lead to better results!

Thank you to Napkin.ai for the graphical images in this post. Napkin.ai is totally free, even at the mid-level service. It is in beta, so the Professional Plan won’t be free forever. I suggest you try it out now. The illustrations were created using Leonardo.ai, another free tool (with daily limits).

Finally, I am available for one-on-one coaching to enhance your prompt engineering skills. If you are interested, please book a free consultation.
