Day 1: Welcome & Foundations

January 13, 2025 – Reading Time: 10 minutes

Hi folks! Over the past few months, as I’ve been talking with friends and colleagues while shaping an approach for my business, I’ve picked up on something interesting. While most people have heard of or even used ChatGPT or Claude (a.k.a. LLMs), nearly everyone I talked with doesn’t know how to use LLMs to their fullest potential. What’s more, they don’t know the basic skills of prompt engineering. There are two important points I’d like to bring up now, and then we will get started with learning!

First, watch this quick 30-second video from late 2024 by Mark Cuban. It should express the importance of prompt engineering and how learning to use LLMs will be so important to so many people over the next 3, 5, or even 10 years.

Second, I heard a saying the other day that resonated: “AI is not going to take your job, but someone using AI will.” That shouldn’t be scary, because learning prompt engineering is as easy as learning how to write a paragraph. You just need to know some essential tips and tricks, plus the features an LLM makes available to you, to get the most out of these powerful tools.

Over the next couple of weeks, we’ll take a slow, steady approach to helping you feel right at home with Artificial Intelligence, particularly large language models (LLMs). We’ll start with the foundations of generative AI: basic prompt engineering and the effective use of AI tools. Eventually, we will get to more advanced prompt engineering techniques. But today, we’re laying the groundwork by introducing AI tools like ChatGPT, how they work, and why they matter.

What Are Large Language Models and ChatGPT?

If you’ve spent time around the world of AI lately, you might have heard the term “LLM,” which stands for Large Language Model. These models are trained on vast amounts of text data (books, websites, articles, and more) to learn patterns in language. The result is an AI system that can generate human-like text, answer questions, and assist with countless tasks. Crafting effective prompts is the core skill of prompt engineering, because the clarity and specificity of your input largely determine how well models like ChatGPT respond.

ChatGPT is an example of an LLM. It uses a technology called the “GPT” architecture (Generative Pretrained Transformer) to predict text based on context. At a high level:

- Generative: It can produce or “generate” new content.

- Pretrained: It has already been trained on massive text datasets before you even use it.

- Transformer: A specialized neural network architecture well-suited for language tasks.

When you type a question or request into ChatGPT, it uses patterns learned to predict what words and phrases might come next. It’s like a super-advanced autocomplete system that can understand and respond in context rather than just guessing random words.
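To make the “autocomplete” idea a little more concrete, here is a toy sketch in Python. It is nowhere near how ChatGPT actually works (real LLMs use transformer neural networks trained on enormous datasets), and the tiny corpus and function names are made up for illustration. It only shows the basic idea of predicting the next word from patterns seen in training text.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A made-up, tiny "training set" (an assumption for illustration only).
corpus = "the cat sat on the mat . the cat sat on the sofa . the dog chased the cat ."
model = train_bigrams(corpus)

print(predict_next(model, "cat"))    # the word seen most often after "cat"
print(predict_next(model, "zebra"))  # None: this word never appeared in training
```

Notice that the toy model has nothing to say about “zebra” because it never saw that word. An LLM is vastly more capable, but the same principle applies: it can only draw on patterns from its training data and from the prompt you give it.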

How ChatGPT Works (simple prompts)

Imagine ChatGPT as a predictive text engine. You type something (the prompt), and ChatGPT draws on its “mental library” of learned patterns to determine how to reply. Because it has been trained on billions of words, it can piece together insights, facts, and creative language to craft an answer. You can expand on the AI’s answers by asking follow-up questions, creating a more interactive and flexible conversation. Within a conversation, the AI keeps track of what was said earlier and can tailor its responses accordingly. However, there are some essential things to keep in mind:

1. Context Is Key: The quality of your prompt (prompt engineering) heavily influences the answer.

2. LLMs Are Not Human: ChatGPT isn’t conscious; it doesn’t “think” or “understand” in a human way. It simply analyzes patterns in data.

3. Information Cut-Off: Depending on the model version you’re using, ChatGPT will know about events only up to a specific date. Newer tools like Perplexity.ai combine internet search with LLM capabilities to work around this. But that is getting ahead of ourselves; for now, think of Perplexity as a search-focused interface built on top of LLMs like the ones behind ChatGPT or Claude.

LLM Prompting Fundamentals

Understanding the basics of how to interact effectively with LLMs is crucial for anyone looking to harness the full potential of these powerful tools. At their core, LLM prompting fundamentals involve knowing how these models work, crafting effective prompts, and refining those prompts to achieve the desired output.

Large language models are trained on vast amounts of text data, enabling them to generate human-like responses to a wide range of inputs. They can assist with various tasks, including question answering, text summarization, and language translation. To get the most out of these models, it’s essential to craft clear, concise, and specific prompts.

When developing prompts, providing enough context is key. This helps the model understand the task at hand and generate a relevant and accurate response. Refining prompts is an iterative process: you adjust the language, tone, and style until the output better matches what you want. This might involve tweaking the prompt multiple times.

By mastering these fundamentals, you’ll be well on your way to creating quality prompts that leverage the full capabilities of LLMs, whether you’re working on simple queries or complex tasks.

Introduction to Prompt Engineering

So, where does prompt engineering come in?

- A prompt is the text you type into ChatGPT. Think of it as instructions or a question for the AI.

- The quality of your prompt determines the quality of the AI’s response.

Prompt engineering is the art and science of crafting those prompts, so you get better results. For example, if you ask, “Tell me about space,” you might get a decent answer—but it could be very general or off-track. If instead you say, “Explain to me how black holes are formed, in simple language, and provide an example of where they might exist in our galaxy,” ChatGPT will likely give you a more detailed, on-point answer.

Optimizing prompts can significantly enhance performance when using language models, especially when fine-tuning is not feasible.

Throughout this 10-day sprint, we’ll refine our prompt engineering skills and learn to structure our prompts—from simple, single-sentence queries to more advanced instructions incorporating role-playing and context-setting. By the end, you’ll have a clear toolkit for making ChatGPT (or other LLMs) do exactly what you need using advanced prompting techniques. It’s like being the master of the universe!

Effective Prompt Writing

Crafting prompts that yield optimal results from large language models (LLMs) is an art and a science. Effective prompt writing helps the model generate relevant, accurate, and valuable responses. Here are some key factors to consider:

- Clarity: Your prompt should be clear and concise, providing enough context for the model to understand the task.

- Specificity: Be specific in your prompts, offering detailed instructions to guide the model toward generating a relevant and accurate response.

- Tone and Style: Match the tone and style of your prompt to the desired response. Setting the right tone is crucial, whether you need a formal explanation or a casual conversation.

- Context: Provide sufficient context to help the model understand the task and generate a response that fits the situation.

In addition to these factors, employing advanced prompting techniques can further enhance the effectiveness of your prompts. Techniques like few-shot prompting, where you provide a few examples to guide the model, can offer more context and improve the quality of the responses.

Here are some best practices for effective prompt writing:

- Use Natural Language: Write prompts in natural language, avoiding jargon and technical terms that might confuse the model.

- Avoid Ambiguity: Steer clear of ambiguous language that could lead to multiple interpretations.

- Use Examples: Providing examples can help the model understand the type of response you’re looking for.

- Refine Prompts: Continuously refine your prompts to achieve the desired output. This iterative process is key to optimizing the model’s responses.

By following these best practices and considering the above critical factors, you can craft prompts that yield optimal results from LLMs, making your interactions with these advanced AI tools more effective and productive.
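If you like to think in templates, the factors above (clarity, specificity, tone, and context) can be sketched as a small Python helper. This is only an illustration: `build_prompt` and its labels are names I made up, not part of ChatGPT or any library, and you would simply paste the resulting text into the chat box.

```python
def build_prompt(task, context="", tone="", output_format=""):
    """Assemble a prompt from a task plus optional context, tone, and format."""
    parts = [task.strip()]
    if context:
        parts.append(f"Context: {context.strip()}")
    if tone:
        parts.append(f"Tone: {tone.strip()}")
    if output_format:
        parts.append(f"Format: {output_format.strip()}")
    return "\n".join(parts)


# A vague prompt: just a task, nothing else for the model to go on.
vague = build_prompt("Tell me about space.")

# A specific prompt: the same kind of request with context, tone, and format.
specific = build_prompt(
    task="Explain how black holes are formed.",
    context="I am new to astronomy.",
    tone="Simple, friendly language.",
    output_format="Two short paragraphs, plus one example from our galaxy.",
)

print(vague)
print("---")
print(specific)
```

The point is not the code itself but the habit: before you hit enter, check whether your prompt states the task, the context, the tone, and the format you want.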

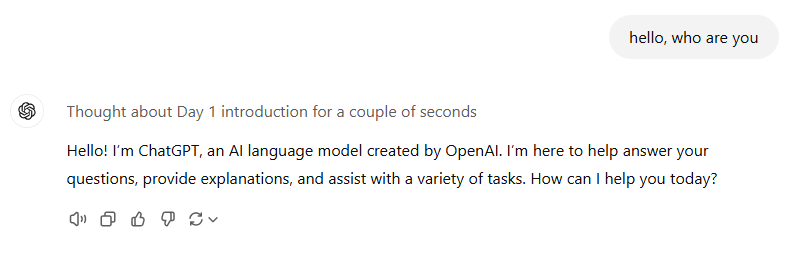

Today’s Hands-On Exercise

We’re going to keep it simple for Day 1:

- Log In: Head over to ChatGPT (or any LLM interface you can access). Chris’ advice: ChatGPT and Claude both offer free tiers. However, consider upgrading to the $20/month level. It provides extra features and as much prompt engineering practice as you will probably ever need.

- Prompt: Type “Hello, who are you?” and press enter.

- Observe: Read the response carefully. What style does ChatGPT use? Does it sound human? Friendly? Formal?

This first interaction is more than just a quick hello. It’s your chance to see how ChatGPT responds to a straightforward prompt.

Take note of:

- The Tone of Voice: Is it conversational, technical, or a mix?

- Level of Detail: Does it give a short or long response?

- Style & Structure: Do you notice paragraphs, bullet points, or a single text block?

Keep these observations handy. Over the next few days, you’ll discover how to mold these elements by refining your prompts and using advanced prompt engineering techniques.

Wrapping Up & Looking Ahead

Congratulations on taking your first step into the world of ChatGPT and LLMs! By now, you should have a feel for:

- What an LLM is and how ChatGPT fits into this category.

- The basics of how ChatGPT generates responses (predicting words from context).

- Why prompt engineering—crafting prompts effectively—matters.

Tomorrow, we’ll explore the Basics of Prompting, including different types of prompts, common mistakes, and how specificity can dramatically change the answers you get. You’ll then experiment with broad vs. specific questions to see firsthand how ChatGPT’s responses evolve.

If you have any lingering questions or curiosities after today’s introduction, leave them in the comments. We’ll be able to address them as we move further along in this sprint. Remember, the key to mastering ChatGPT is practice, observation, and refinement. Please stick with it; by the end of these two weeks, you’ll be using advanced prompting techniques.

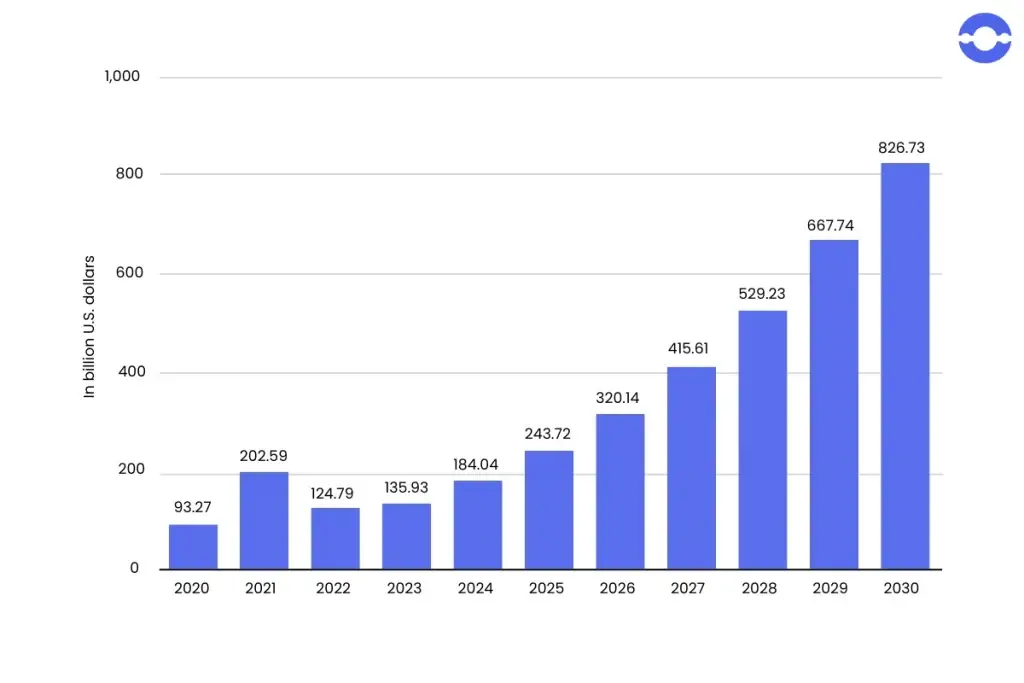

Here is a little extra to generate some enthusiasm. Take a look at the graph to see where AI is today and where it is projected to be in just a few short years.

Graph from bigohtech.com: https://bigohtech.com/artificial-intelligence-statistics-and-trends/

Today’s New Concept

Few-Shot Prompting

Imagine you’re trying to teach a friend how to do something new, like drawing a tree. Instead of just saying, “Draw a tree,” you begin by showing them a few examples of trees you’ve drawn before. Maybe one is a pine tree, another is an oak tree, and a third is a simple stick tree. Then you say, “Now try drawing your tree like these.”

Few-shot prompt engineering works the same way! You give ChatGPT a few examples of how you’d like it to answer before asking it to do something new. These examples help the AI understand the answer type you’re looking for, like the tone, detail, or format.
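Here is a rough sketch of what a few-shot prompt can look like when assembled in Python. The `few_shot_prompt` helper, the “Input:/Output:” labels, and the sample reviews are all my own assumptions for illustration; there is no single required format, and you can type the same text directly into ChatGPT.

```python
def few_shot_prompt(instruction, examples, new_input):
    """Build a prompt that shows worked examples before the new request."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # End with the new input and an empty "Output:" for the model to complete.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Made-up examples, like the pine, oak, and stick trees in the analogy above.
examples = [
    ("The movie was fantastic!", "Positive"),
    ("I waited an hour and the food was cold.", "Negative"),
    ("The package arrived on Tuesday.", "Neutral"),
]

prompt = few_shot_prompt(
    "Classify the sentiment of each input as Positive, Negative, or Neutral.",
    examples,
    "Best purchase I have made all year.",
)
print(prompt)
```

The three examples play the role of the trees you showed your friend: they tell the AI what kind of answer you expect (a single word, from a fixed set of labels) without you having to spell out every rule.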
