Master prompt crafting strategies for better interactions. Discover practical tips and techniques to enhance your communication skills.

January 15, 2025 – Reading Time: 11 minutes

If you missed day 1 of the course, you can find it and all of the courses in this series on the main Learn Prompt Engineering Basics page.

Day 3—Learn to Prompt: Crafting Strategies

Mastering advanced prompts is essential for harnessing the full power of ChatGPT. A well-designed prompt transforms simple queries into impactful tools, enabling you to achieve tailored, high-quality outputs.

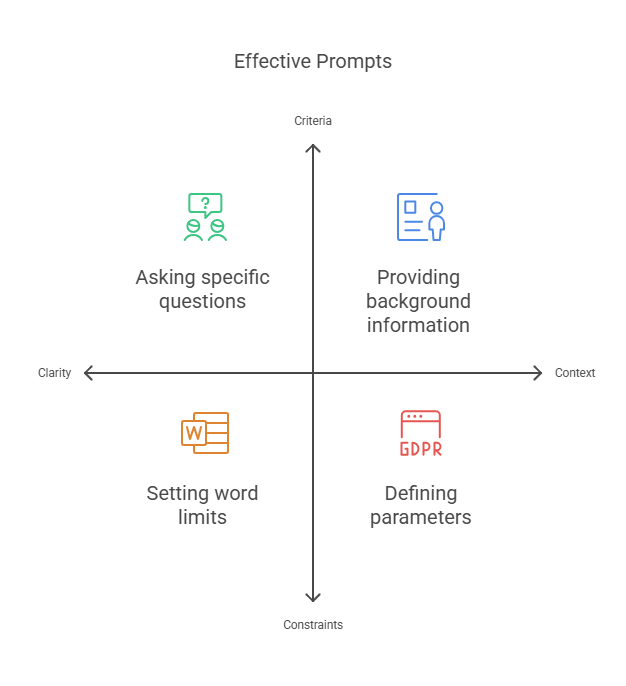

This session focuses on four key elements—clarity, context, constraints, and criteria—that underpin effective prompt engineering. It also covers practical techniques like zero-shot and few-shot prompting to refine your results.

Understanding Prompts

Prompts are the foundation of effective communication with LLMs. A well-crafted prompt can elicit a precise and relevant response, while a poorly designed prompt can lead to confusion and inaccuracies.

Understanding the nuances of prompt development is crucial for creating effective prompts that yield desired outcomes.

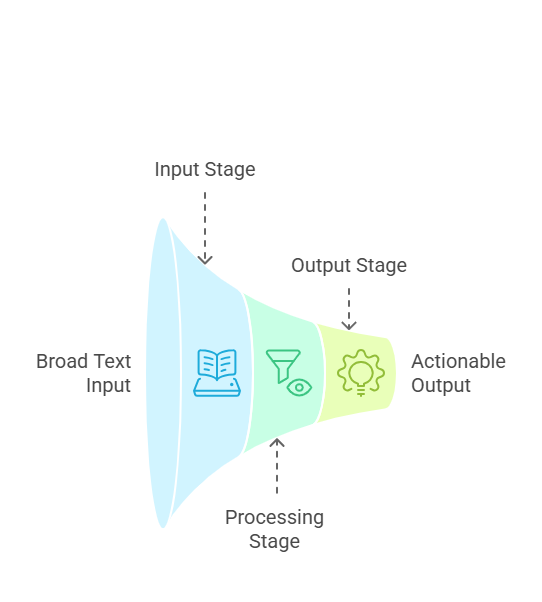

A prompt is the text you give a language model to generate a response. Prompts can be simple or complex and can include elements such as keywords, phrases, and context.

The goal of a prompt is to give the language model enough information to generate a relevant and accurate answer.

There are several key elements to consider when crafting a prompt:

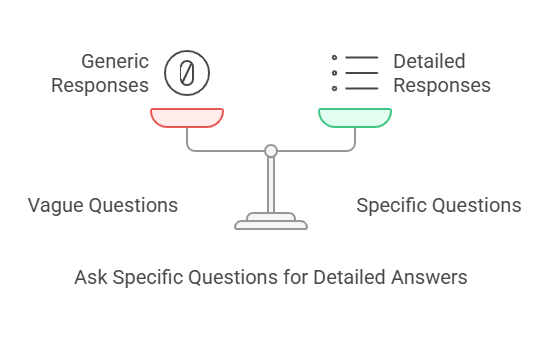

Specificity

A good prompt should be specific and clear about what is being asked. Avoid vague or open-ended prompts that may confuse the language model.

Instead of asking, “Tell me about marketing,” you could ask, “Explain three digital marketing strategies for small businesses.”

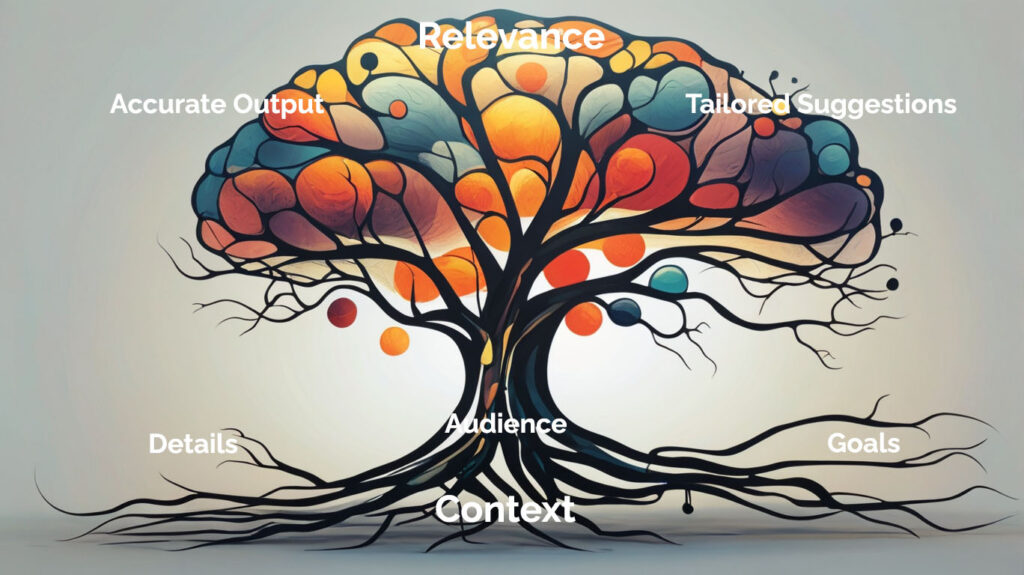

Context

Provide context to help the language model understand the prompt’s topic or subject matter. This can include background information, definitions, or relevant details.

For instance, if you’re asking for a book summary, mentioning the book’s title, author, and central themes can lead to a more accurate response.

Tone and Style

The prompt’s tone and style can influence the language model’s outcome. When crafting the prompt, consider the tone and style of the desired reply.

For example, if you need a formal business report, your prompt should reflect that tone.

Length

The length of the prompt can also affect the outcome. Shorter prompts tend to elicit more concise responses, while longer prompts tend to elicit more detailed and nuanced replies.

For example, a brief prompt like “Describe the benefits of exercise” will yield a different answer than a detailed prompt like “Describe the physical, mental, and social benefits of regular exercise for adults.”

By understanding these elements and how to craft effective prompts, developers can unlock the full potential of LLMs and achieve the desired outcomes.
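The elements above can be combined in a small helper. This is only a sketch: the function name `build_prompt`, its parameters, and the bakery detail are assumptions made for illustration, and the result is a plain string you could paste into any chat interface.

```python
def build_prompt(task, context="", tone="", length_hint=""):
    """Assemble a prompt from a specific task plus optional elements.

    Hypothetical helper: the labels and their order are one reasonable
    choice for illustration, not a required format.
    """
    parts = [task.strip()]
    if context:
        parts.append(f"Context: {context.strip()}")
    if tone:
        parts.append(f"Tone and style: {tone.strip()}")
    if length_hint:
        parts.append(f"Length: {length_hint.strip()}")
    return "\n".join(parts)


prompt = build_prompt(
    task="Explain three digital marketing strategies for small businesses.",
    context="The reader runs a local bakery with no marketing staff.",  # assumed detail
    tone="Formal, suitable for a business report.",
    length_hint="About 200 words.",
)
print(prompt)
```

Leaving out an optional element simply drops that line, so you can compare how the model responds with and without each one.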

Elements of a Good Prompt

Constraints

Constraints are guardrails that refine the output. Whether you’re working with word limits or stylistic preferences, they help focus outcomes. For instance, “Summarize this article in 150 words, focusing only on main arguments” ensures brevity while avoiding tangential points.

Constraints help reduce ambiguity and guide ChatGPT toward replies that align with your vision.

Criteria

Defining success criteria clarifies expectations. To illustrate: “Suggest three marketing slogans under 10 words, including the phrase ‘Go Green.’”

This ensures that the response meets structural requirements and integrates key phrases or themes. Criteria are beneficial for creative tasks where multiple parameters are involved.
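Success criteria like these can also be checked after the model replies. The sketch below assumes the criteria from the slogan example (under 10 words, contains "Go Green"); the function name `meets_criteria` and the sample slogans are hypothetical.

```python
def meets_criteria(slogan, required_phrase="Go Green", max_words=10):
    """Return True if the slogan is under max_words and contains the phrase.

    Words are counted by splitting on whitespace, which is a simple
    approximation that is good enough for short slogans.
    """
    word_count = len(slogan.split())
    has_phrase = required_phrase.lower() in slogan.lower()
    return word_count < max_words and has_phrase


# Sample slogans written for this illustration, not model output.
candidates = [
    "Go Green, live clean",
    "Save the planet today",
    "Go Green and make every single day count for everyone",
]
for slogan in candidates:
    print(slogan, "->", meets_criteria(slogan))
```

A check like this makes it easy to spot which suggestions to keep and which to send back with a reminder of the criteria.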

Using Context Effectively

Guide ChatGPT with Background Information

Providing more context, such as goals, audience details, or the project stage, ensures tailored outcomes. For instance, specifying the target atmosphere and clientele demographics enhances relevance when brainstorming names for a local cafe.

Context is the backbone of purposeful results. Without it, replies from the LLM might lack focus or drift away from your objectives.

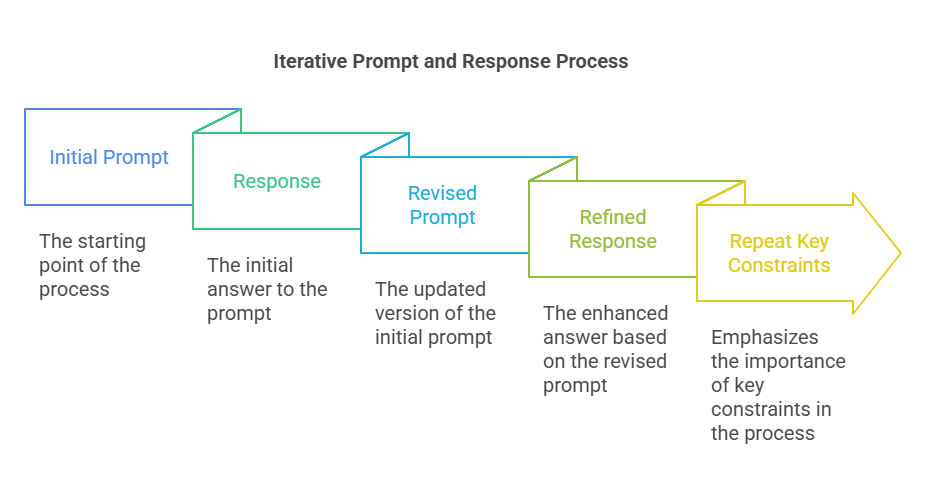

Reiterate Key Details

For follow-up queries, reiterating essential constraints helps maintain focus. For instance, repeating “under 10 words” for a slogan prompt minimizes irrelevant outputs.

Reinforcing key details ensures consistency across multiple exchanges.
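One way to make reiteration a habit is to attach the constraints to every follow-up automatically. This is a minimal sketch; `with_constraints` and the reminder wording are assumptions for illustration.

```python
def with_constraints(follow_up, constraints):
    """Append a reminder of the key constraints to a follow-up message."""
    if not constraints:
        return follow_up
    reminder = "; ".join(constraints)
    return f"{follow_up}\nKeep these constraints: {reminder}."


constraints = ["under 10 words", "include the phrase 'Go Green'"]
message = with_constraints("Give me three more slogans.", constraints)
print(message)
```

Keeping the constraints in one list means every follow-up repeats exactly the same wording, which helps keep results consistent across exchanges.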

Working with LLMs

LLMs (large language models) are artificial intelligence models designed to process and generate human-like language. They are trained on vast amounts of text data and can be fine-tuned for specific tasks and applications.

When working with LLMs, it’s essential to understand their strengths and limitations. LLMs can:

Generate Text

LLMs can generate text based on a prompt or input. This can be useful for content creation, chatbots, and language translation tasks.

You can use an LLM to draft blog posts, create dialogue for virtual assistants, or translate documents into different languages.

Analyze Text

LLMs can analyze text to extract insights, sentiment, and meaning. This can be useful for text classification, sentiment analysis, and topic modeling tasks.

For instance, businesses can use LLMs to analyze customer reviews and gauge overall sentiment towards their products.

Answer Questions

LLMs can answer questions based on their training data. This can be useful for question answering, trivia, and knowledge retrieval.

For instance, an LLM can be used to build a Q&A system that provides users with accurate information based on a vast knowledge database.

However, LLMs also have limitations. They can:

Struggle with Context

LLMs may struggle to understand context, nuance, and subtlety. This can lead to inaccurate or irrelevant outcomes.

For instance, an LLM might misinterpret a sarcastic comment as a serious statement.

Be Biased

LLMs can be biased towards specific topics, perspectives, or language styles. This can impact the accuracy and fairness of the replies.

For instance, if a language model is trained on biased data, it may produce biased outputs.

Require Fine-Tuning

LLMs may require fine-tuning for specific tasks or applications. This can involve adjusting the model’s parameters, training data, or architecture.

For example, an LLM intended for medical diagnosis might need to be fine-tuned with specialized medical data to improve its accuracy.

By understanding the strengths and limitations of LLMs, developers can design effective prompts and applications that leverage the power of these models.

Advanced Prompting Techniques

Zero-Shot Prompting

Zero-shot prompting relies on clear instructions without demonstrations. For instance, you might request, “Explain the theory of relativity in simple terms,” and receive a coherent answer.

However, zero-shot prompting may lead to generic or incomplete answers for more nuanced or creative tasks.

For example, asking for a marketing slogan without additional guidance might produce vague results like “Innovate your future.” In contrast, a few-shot prompt could include examples like “‘Think Different’ by Apple” to guide the model toward more targeted and impactful suggestions.

For instance: “Describe gravitational lensing in two paragraphs for a general audience.” This approach works best for straightforward tasks requiring well-defined answers. While effective for simpler goals, complex tasks often benefit from samples.

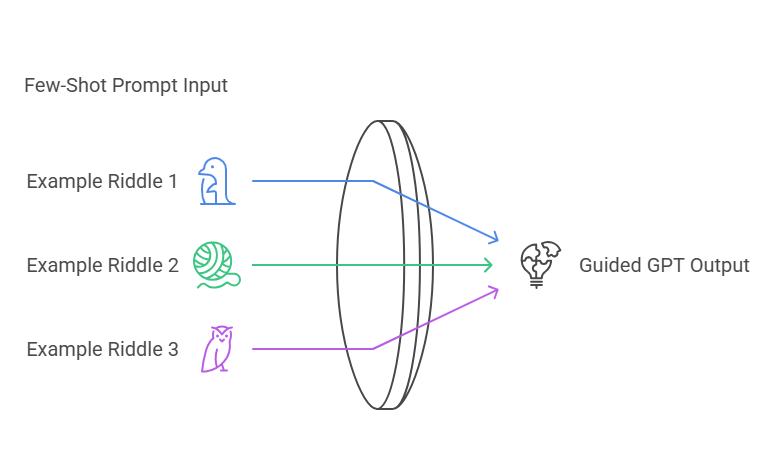

Few-Shot Prompting

Few-shot prompting includes examples in the prompt to guide the tone, structure, and style of the response.

This approach is particularly practical for creative or technical writing because it sets a clear template for the model to follow.

By providing examples, you guide ChatGPT in matching specific stylistic nuances or complex requirements, so the output aligns closely with your intended results. For instance, when asking for riddles, you might provide:

Example:

- “I’m a bird that cannot fly; in black and white, I slide on ice. What am I? (Answer: A penguin)”

Including examples demonstrates the expected reply format, making this method ideal for creative or nuanced tasks.

Few-shot prompting improves precision by showing instead of telling.
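A few-shot prompt is simply an instruction, a handful of examples, and the actual request placed in one message. The sketch below shows one possible layout; the function name `few_shot_prompt`, the "Example N:" labels, and the request text are assumptions for illustration.

```python
def few_shot_prompt(instruction, examples, request):
    """Lay out an instruction, numbered examples, and the final request."""
    lines = [instruction, ""]
    for i, example in enumerate(examples, start=1):
        lines.append(f"Example {i}: {example}")
    lines.append("")
    lines.append(request)
    return "\n".join(lines)


riddle = (
    "I'm a bird that cannot fly; in black and white, I slide on ice. "
    "What am I? (Answer: A penguin)"
)
prompt = few_shot_prompt(
    instruction="Write riddles in the same style as the example.",
    examples=[riddle],
    request="Write two new riddles about animals, each with its answer.",
)
print(prompt)
```

Removing the examples from the same prompt turns it back into a zero-shot request, which makes the two techniques easy to compare side by side.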

Limitations and Considerations

While LLMs and prompts can be powerful tools, there are several limitations and considerations to keep in mind:

Bias and Fairness

LLMs can perpetuate biases and stereotypes present in the training data. Developers must consider the potential impact of these biases on the replies generated by the model.

If an LLM is trained on data containing gender biases, it may produce biased outputs that reinforce those stereotypes.

Context and Nuance

LLMs may struggle to understand context, nuance, and subtlety. Developers must consider the potential limitations of the model in these areas.

For instance, a language model might not fully grasp the cultural context of a joke, leading to inappropriate or confusing replies.

Data Quality

The quality of the training data can impact the accuracy and relevance of the responses generated by the model. Developers must consider the quality of the data and potential sources of error.

As an illustration, if the training data contains outdated or incorrect information, the model’s outputs may be inaccurate.

Explainability

LLMs can be challenging to interpret and explain. Developers must consider the potential limitations of the model in terms of explainability and transparency.

For instance, it can be difficult to understand why an LLM produced a particular output, which is a problem in high-stakes applications like medical diagnosis or legal advice.

By understanding these limitations and considerations, developers can design more effective prompts and applications that take into account LLMs’ strengths and weaknesses. This holistic approach ensures that AI tools are used responsibly and effectively, maximizing their potential while mitigating risks.

Hands-On Exercise

Practice crafting a few-shot prompt of your own. If you are describing handcrafted soaps, include samples to establish a pattern:

Example:

- Lavender Dream: Infused with calming lavender oil to soothe your mind. ‘Clean up and wind down!’

Request descriptions for new soaps, ensuring each highlights an ingredient, states its benefit, and ends with a playful tagline. Additionally, apply the same format to another product category, such as candles.

To illustrate: ‘Vanilla Bliss Candle: Crafted with natural vanilla bean for a warm, relaxing aroma. Light up your moments with cozy elegance!’ This demonstrates how the approach adapts across different cases.

Compare the responses with your examples to see how closely the model follows the pattern you set.
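If you want to reuse the exercise across product categories, it can help to store each sample as structured fields. This is a sketch under assumptions: the `ProductExample` class, the `exercise_prompt` function, and the new soap names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProductExample:
    name: str
    description: str  # names the ingredient and states its benefit
    tagline: str

    def render(self):
        """Format the sample in the pattern: Name: description tagline"""
        return f"{self.name}: {self.description} {self.tagline}"


def exercise_prompt(category, examples, new_names):
    """Build the exercise prompt for any product category."""
    lines = [
        f"Write product descriptions for {category}.",
        "Each description names one ingredient, states its benefit, "
        "and ends with a playful tagline.",
        "",
        "Examples:",
    ]
    lines += [f"- {example.render()}" for example in examples]
    lines += ["", "Now write descriptions for: " + ", ".join(new_names)]
    return "\n".join(lines)


lavender = ProductExample(
    name="Lavender Dream",
    description="Infused with calming lavender oil to soothe your mind.",
    tagline="Clean up and wind down!",
)
# The new product names below are made up for this illustration.
prompt = exercise_prompt("handcrafted soaps", [lavender], ["Citrus Burst", "Oatmeal Honey"])
print(prompt)
```

Swapping the category and samples, for example to candles, leaves the rest of the prompt unchanged.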

Key Takeaways from Day 3

Foundational Principles Enhance AI Effectiveness

Clarity, context, constraints, and criteria form the basis for creating well-rounded prompts that drive actionable and relevant outputs.

Using these elements in combination ensures AI responses align with specific goals and audience needs.

Tailored Applications Across Fields

These principles can be applied in various professional contexts, such as marketing (creating personalized ad copy), education (designing adaptable lesson plans), and research (summarizing literature or generating hypotheses), maximizing the potential of AI tools.

Practice and Techniques Refine Results

Experimentation with zero-shot and few-shot prompting techniques sharpens prompt crafting skills, transforming basic queries into powerful collaborative tools that deliver precise and meaningful outcomes.

Tomorrow, we’ll explore strategies for refining prompts through iteration so you can continuously improve the quality of ChatGPT’s responses.

Stay curious and keep experimenting—better prompts lead to better results!

Thank you to Napkin.ai for the graphical images in this post. Napkin.ai is totally free even at the mid-level service. It is in beta, so the Professional Plan won’t be free forever. I suggest you try it out now.

Finally, I am available for one-on-one coaching to enhance your prompt engineering skills. If you are interested, please book a free consultation.